SAMKHYA (सांख्य)

HIGH PERFORMANCE COMPUTING FACILITY

Overview

Samkhya (सांख्य), also referred to as Sankhya, Sāṃkhya, or Sāṅkhya, is a Sanskrit word that, depending on the context, means "to reckon, count, enumerate, calculate, deliberate, reason, reasoning by numeric enumeration, relating to number, rational" [1].

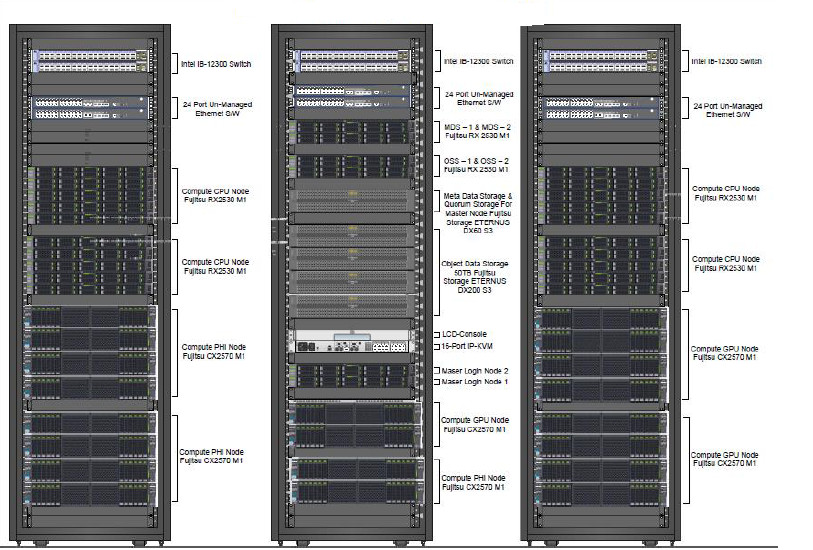

"SAMKHYA - High Performance Computing (HPC) Facility at IOP is a 222 TeraFLOPS hybrid environment which consists of CPU, NVIDIA Tesla GPU, Intel Xeon-Phi, QDR Infiniband interconnect and 7.5 TB of memory.

Samkhya has been featured in Top Supercomputers-India list since January 2018.

Link: January 2018 report

System overview:

- 1440 Intel Haswell processor cores

- 7.5 TB Memory

- 40 NVIDIA Tesla K80 cards

- 40 Intel Xeon Phi 7120P

- 50 TB of Object Storage

- Infiniband QDR Interconnect

References:

[1] Wikipedia

Accessing the Facility

Users should log in to our machines with an SSH client such as PuTTY. Unencrypted methods such as telnet, rlogin, and XDM are NOT allowed. An SSH client typically asks for the name or IP address of the machine you want to reach, together with a username and a password, before granting access. The interface differs from platform to platform (PC-based clients typically have GUIs, while Unix-based clients may not).

You should also get into the habit of using secure copy (SCP -- a companion program often bundled with ssh) instead of the traditional ftp utility to transfer files. SCP is more flexible than ftp in that it allows you to transfer directories from one machine to another in addition to just files. There are SCP graphical user interfaces for Windows and for Macs.
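As a rough sketch of the transfers described above, the snippet below builds scp commands for copying a directory to the cluster and for fetching results back. The account name `your_account` and the paths are placeholders, not real values, and the `run` helper is hypothetical; the hostname is the login host given in the SSH instructions in this document. By default the helper only prints each command (a dry run); set `DRY_RUN=0` to execute them.

```shell
#!/bin/bash
# Placeholders (assumptions): replace with your own account and paths.
HPC_USER="your_account"
HPC_HOST="samkhyassh.iopb.res.in"

# Print the command in dry-run mode (the default); execute it when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# -r copies a whole directory tree to your home directory on the cluster.
run scp -r ./my_project "$HPC_USER@$HPC_HOST:~/"

# Fetch a results directory from the cluster into the current directory.
run scp -r "$HPC_USER@$HPC_HOST:~/my_project/results" .
```

Printing the command first makes it easy to check the source and destination before any data moves.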

If you wish to run programs with graphical interfaces on one of our machines and have them display on your workstation, you will need an X11 server or X11 server emulator running on your workstation.
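One quick way to check whether graphical programs can reach your workstation is to look at the DISPLAY variable after logging in, since OpenSSH sets it on the remote side when X11 forwarding (`ssh -X`) is active. The helper name `have_display` below is hypothetical; this is a minimal sketch, not an official diagnostic.

```shell
#!/bin/bash
# Succeeds when an X11 display is available to this shell session.
have_display() {
    [ -n "${DISPLAY:-}" ]
}

if have_display; then
    echo "X11 forwarding looks active (DISPLAY=$DISPLAY)"
else
    echo "DISPLAY is not set; log in again with: ssh -X <account>@<host>"
fi
```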

Instructions for using SSH with our systems are listed below for each operating system:

SAMKHYA USERS

Windows Users:

- 1. Install an SSH client such as PuTTY.

- 2. Click on the SSH Secure Shell client icon.

- 3. Click the Quick Connect button.

- 4. Enter the following details: Hostname: samkhyassh.iopb.res.in, Username: [your user account].

- 5. Click Connect and enter your password when prompted.

Linux Users:

- 1. Open a terminal and type ssh [your account name]@samkhyassh.iopb.res.in.

- 2. Enter your password when prompted.

- 3. After a successful login, you are in your home directory.

Getting an Account

Please fill in the form available here to get an account.

Nodes Information

Head (Master) Node

The head node is used for login and job submission; it should not be used to execute jobs. Our HPC facility has two master nodes for high availability.

To get the IP address or hostname of the Samkhya HPC, please contact HPC Support.

Compute (Slave) Node

The HPC facility consists of 60 compute nodes in a hybrid environment containing CPUs, Nvidia GPUs, and Intel Xeon Phi cards.

| Sl. No. | Nodes | Type | Cores and Cards |
|---|---|---|---|
| 1. | node1-node20 | CPU only | 480 cores (24 cores/node) |
| 2. | node21-node40 | Nvidia GPU and CPU | 480 cores (24 cores/node), 40 cards (2 per node) |
| 3. | node41-node60 | Intel Xeon Phi and CPU | 480 cores (24 cores/node), 40 cards (2 per node) |
| Total | | | 1440 CPU cores, 40 Nvidia cards, 40 Xeon Phi cards |
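The totals in the table follow directly from the per-node figures. The short sketch below redoes that arithmetic; the variable names are illustrative only.

```shell
#!/bin/bash
# Figures taken from the node table.
groups=3            # CPU-only, GPU, and Xeon Phi groups
nodes_per_group=20
cores_per_node=24
cards_per_node=2

total_nodes=$(( groups * nodes_per_group ))
total_cores=$(( total_nodes * cores_per_node ))
gpu_cards=$(( nodes_per_group * cards_per_node ))
phi_cards=$(( nodes_per_group * cards_per_node ))

# Prints: nodes=60 cores=1440 gpu_cards=40 phi_cards=40
echo "nodes=$total_nodes cores=$total_cores gpu_cards=$gpu_cards phi_cards=$phi_cards"
```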

Application

| Application name and version | Directory or module command |
|---|---|
| Cython 0.26 | /data/software/cython/bin |
| Fftw 3.3.7 | /data/software/fftw337/bin |
| Gcc (available versions: 4.8.5, 6.2, 7.2) | Gcc 4.8.5 (default): /data/software/gcc485/bin; module load gcc62: /data/software/gcc62/bin; module load gcc72: /data/software/gcc72/bin |
| Globes 3.2.16 | /data/software/globes3216/bin (module load globes3216) |
| Grace 5.1.25 | /data/software/grace5125/grace/bin |
| Gsl 1.15 | /data/software/gsl115/bin |
| Hdf5 1.8.20 | /data/software/hdf5/bin |
| Lapack 3.7.1 | /data/software/lapack-3.7.1/liblapack.a, /data/software/lapack-3.7.1/librefblas.a |
| Lhapdf 5.9.1 | /data/software/lhapdf-5.9.1/bin |
| NuSQUID-master | /data/software/nuSQuIDS-master/bin |
| Squid-master | /data/software/squids/lib |
| Ocaml 4.05.0 | /data/software/ocaml/bin |
| opam | /data/software/opam/bin |
| Pgi 18.4 | /data/software/pgi/linux86-64/18.4/bin |
| Pvm 3.4.5 | /data/software/pvm/pvm3/bin |
| Pythia 8180 | /data/software/pythia/bin |
| root_v5.34.36 | /data/software/root/bin |
| root_v6.14 | /data/software/root-6.14.00/obj/bin |
| Vasp 5.3 | /data/software/vasp |
| Vmd 1.9.3 | /data/software/vmd-1.9.3/bin |
| Gnuplot 5.0.1 | /data/software/gnuplot501/bin |
| Mathematica_11.2 | module load mathematica_11.2 |
| Mathematica_11.3 | module load mathematica_11.3 |
| Matlab v8.1.0.604 | /data/software/Matlab8/bin |
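For entries listed with a directory rather than a `module load` command, one possible way to use them is to put that directory on your PATH. The sketch below assumes this is acceptable on the cluster; `add_to_path` is a hypothetical helper, not something the facility provides. Where the table gives a `module load` command, that is probably the better route.

```shell
#!/bin/bash
# Prepend a directory to PATH unless it is already listed there.
add_to_path() {
    case ":$PATH:" in
        *":$1:"*) ;;                 # already present, nothing to do
        *) PATH="$1:$PATH" ;;
    esac
    export PATH
}

# Example: make the gnuplot binaries from the table available.
add_to_path /data/software/gnuplot501/bin
echo "$PATH"
```

Checking for an existing entry keeps PATH from growing each time the snippet is sourced, for example from a shell startup file.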

Network

- 1 Gbps LAN connectivity

- QDR 40 Gbps Infiniband Interconnect

Job Scheduler

Jobs are submitted to the compute nodes through the Torque resource manager, with PBS as the job scheduler.

Scheduler commands:

| Command | Use and Output | Remarks |
|---|---|---|
| qsub <submission_script> | Submit a job | Prints the job ID |
| qstat | Status of jobs | Use the option "-f" for full display |
| pbsnodes -a | Show status of all compute nodes | |
| pbsnodes -ln | Show down nodes | |
| qstat -B | Show batch server status, including job counts | |
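The commands in the table can be chained in a small script. The sketch below assumes that qsub prints an ID of the form `<number>.<server>`, as Torque normally does; `job_number` is a hypothetical helper and `12345.headnode` in the usage note is a made-up ID. The scheduler calls only run when qsub and a script.cmd file are actually present.

```shell
#!/bin/bash
# Strip the server suffix from a Torque job ID such as "12345.headnode".
job_number() {
    printf '%s\n' "${1%%.*}"
}

# Only talk to the scheduler when qsub is available (i.e. on the cluster)
# and a job script exists in the current directory.
if command -v qsub >/dev/null 2>&1 && [ -f script.cmd ]; then
    jobid=$(qsub script.cmd)          # qsub prints the new job's ID
    echo "Submitted job $(job_number "$jobid")"
    qstat -f "$jobid"                 # full status of that job
fi
```

For example, `job_number 12345.headnode` prints `12345`.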

Storage

Each user has 500 GB of storage space, out of a total storage capacity of 50 TB.

There is no data backup mechanism at present. Users are advised to back up their important files to storage outside the HPC facility.
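To keep an eye on the 500 GB allowance, you could compare the size of a directory against it. This is only a sketch: it assumes 1 GB = 1024 × 1024 KB, the helper names are hypothetical, and whether or how the limit is enforced on the cluster is not stated here.

```shell
#!/bin/bash
# 500 GB per-user allowance expressed in KB (assumes 1 GB = 1024 * 1024 KB).
QUOTA_KB=$(( 500 * 1024 * 1024 ))

# Disk usage of a directory in KB, as reported by du.
usage_kb() {
    du -sk "$1" | cut -f1
}

# Whole-number percentage of the allowance used by a directory.
percent_used() {
    echo $(( $(usage_kb "$1") * 100 / QUOTA_KB ))
}

# Check the directory given as the first argument (default: current directory).
target="${1:-.}"
echo "$target uses $(percent_used "$target")% of the 500 GB allowance"
```

Pass your home directory as the argument to check your whole account.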

Submitting Jobs to the Cluster using the scheduler

[A] For Jobs Using only CPUs

Sample serial job script

    #!/bin/bash
    #PBS -o $PBS_JOBID.out
    #PBS -e $PBS_JOBID.err
    #PBS -q small
    #PBS -l nodes=1:ppn=1
    # Change to the directory the job was submitted from
    cd $PBS_O_WORKDIR
    # Compile the source file (use icc instead of gcc for the Intel compiler)
    gcc input_file
    # Run the resulting executable
    ./a.out

Save the file as script.cmd, keep your input file and this script in the same directory, and run "qsub script.cmd". Use a new directory every time you submit a job; do not submit multiple jobs from the same directory.
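The one-directory-per-job rule above can be automated. The following is a minimal sketch, not a facility-provided tool: `prepare_job_dir` is a hypothetical helper, and the `$HOME/jobs` location in the usage comment is just an example.

```shell
#!/bin/bash
# Create a fresh, uniquely named directory under $1, copy the job script ($2)
# and the input file ($3) into it, and print the new directory's path.
prepare_job_dir() {
    local base="$1"
    local script="$2"
    local input="$3"
    local dir
    mkdir -p "$base" || return 1
    dir=$(mktemp -d "$base/job_XXXXXX") || return 1
    cp "$script" "$input" "$dir/" || return 1
    printf '%s\n' "$dir"
}

# Typical use on the cluster (not run here):
#   cd "$(prepare_job_dir "$HOME/jobs" script.cmd input_file)" && qsub script.cmd
```

Because mktemp picks a unique name each time, two submissions never share a directory, so their output files cannot overwrite each other.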

[B] Sample job script (OpenMP jobs/single-node jobs)

    #!/bin/bash
    #PBS -o $PBS_JOBID.out
    #PBS -e $PBS_JOBID.err
    #PBS -q small
    #PBS -l nodes=1:ppn=24
    # Change to the directory the job was submitted from
    cd $PBS_O_WORKDIR
    # Compile with the MPI compiler wrapper
    mpicc input_file
    # Launch 24 processes on the cores listed in the node file
    mpirun -np 24 -machinefile $PBS_NODEFILE ./a.out

Save the file as script.cmd, keep your input file and this script in the same directory, and run "qsub script.cmd". Use a new directory every time you submit a job; do not submit multiple jobs from the same directory.
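For a threaded OpenMP program, the thread count is usually set through the OMP_NUM_THREADS environment variable rather than through mpirun. A minimal sketch, assuming Torque writes one line per allocated core to $PBS_NODEFILE (its usual behaviour); `count_cores` is a hypothetical helper that falls back to 1 outside a job.

```shell
#!/bin/bash
# Count the cores granted to the job: one line per core in $PBS_NODEFILE.
# Outside a PBS job (no node file) fall back to a single core.
count_cores() {
    if [ -n "${PBS_NODEFILE:-}" ] && [ -f "$PBS_NODEFILE" ]; then
        wc -l < "$PBS_NODEFILE" | tr -d ' '
    else
        echo 1
    fi
}

# Let the OpenMP runtime use every core the scheduler allocated.
export OMP_NUM_THREADS="$(count_cores)"
echo "OMP_NUM_THREADS=$OMP_NUM_THREADS"
```

With `nodes=1:ppn=24` this would give 24 threads, and the program can then be started directly with ./a.out.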

[C] Sample job script (Parallel jobs/multi-node jobs)

    #!/bin/bash
    #PBS -o $PBS_JOBID.out
    #PBS -e $PBS_JOBID.err
    #PBS -q medium
    #PBS -l nodes=4:ppn=24
    # Change to the directory the job was submitted from
    cd $PBS_O_WORKDIR
    # Compile with the MPI compiler wrapper
    mpicc input_file
    # Launch 96 processes (4 nodes x 24 cores) across the allocated nodes
    mpirun -np 96 -machinefile $PBS_NODEFILE ./a.out

Save the file as script.cmd, keep your input file and this script in the same directory, and run "qsub script.cmd". Use a new directory every time you submit a job; do not submit multiple jobs from the same directory.
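The value passed to `-np` is the product of the nodes and ppn requests (4 × 24 = 96 here). Instead of hard-coding it, the count can be derived from the node file, assuming Torque lists each node once per allocated core. The helper names below are hypothetical, and the mpirun line is left as a comment so the sketch is safe to run anywhere.

```shell
#!/bin/bash
# Number of distinct nodes listed in a Torque node file.
node_count() {
    sort -u "$1" | wc -l | tr -d ' '
}

# Total process slots: the node file has one line per allocated core.
slot_count() {
    wc -l < "$1" | tr -d ' '
}

# Inside a job, report the allocation and derive -np from it.
if [ -n "${PBS_NODEFILE:-}" ] && [ -f "$PBS_NODEFILE" ]; then
    np=$(slot_count "$PBS_NODEFILE")
    echo "Allocated $(node_count "$PBS_NODEFILE") nodes, $np process slots"
    # mpirun -np "$np" -machinefile "$PBS_NODEFILE" ./a.out
fi
```

Deriving the count this way keeps the mpirun line correct if the `nodes` or `ppn` request changes.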

[D] For Jobs Using only GPUs

Sample job script (GPU-based jobs)

    #!/bin/bash
    #PBS -o $PBS_JOBID.out
    #PBS -e $PBS_JOBID.err
    #PBS -q gpu
    #PBS -l nodes=1:ppn=2:gpu
    # Change to the directory the job was submitted from
    cd $PBS_O_WORKDIR
    # Compile the CUDA source with nvcc, then run it
    nvcc input_file
    ./a.out

Save the file as script.cmd, keep your input file and this script in the same directory, and run "qsub script.cmd". Use a new directory every time you submit a job; do not submit multiple jobs from the same directory.

[E] To submit jobs to the Phi nodes

    #!/bin/bash
    #PBS -o $PBS_JOBID.out
    #PBS -e $PBS_JOBID.err
    #PBS -q phi
    #PBS -l nodes=1:ppn=2:phi
    # Change to the directory the job was submitted from
    cd $PBS_O_WORKDIR
    # Compile with the Intel compiler, then run the executable
    icc input_file
    ./a.out

Save the file as script.cmd, keep your input file and this script in the same directory, and run "qsub script.cmd". Use a new directory every time you submit a job; do not submit multiple jobs from the same directory.

Changing a password

To change your password, log in to the cluster, type the command passwd, and respond to the prompts by entering your old password and then your new password. This changes the password you use to log in to the cluster.

Tutorials

Running ROOT:

- Step 1: Log in with X11 forwarding and enter your password when prompted.

ssh -X account_name@10.0.0.27

- Step 2: [user@konark ~] $ module load gcc72 (because the gcc 7.2 version is compatible)

- Step 3: [user@konark ~] $ root -l (run the command)

Running Matlab:

- Step 1: Log in with X11 forwarding and enter your password when prompted.

ssh -X account_name@10.0.0.27

- Step 2: [user@konark ~] $ matlab (run the command)

Running Mathematica:

- Step 1: Log in with X11 forwarding and enter your password when prompted.

ssh -X account_name@10.0.0.27

- Step 2: [user@konark ~] $ module load mathematica_11.2

- Step 3: [user@konark ~] $ mathematica (run the command)